Learning-To-Learn: RNN-based optimization

Around the middle of June, this paper came up: Learning to learn by gradient descent by gradient descent. For someone who’s interested in optimization and neural networks, I think this paper is particularly interesting. The main idea is to use neural networks to tune the learning rate for gradient descent.

Summary of the paper

Usually, when we want to design learning algorithms for an arbitrary problem, we first analyze the problem, and use the insight from the problem to design learning algorithms. This paper takes a one-level-above approach to algorithm design by considering a class of optimization problems, instead of focusing on one particular optimization problem.

The question is how to learn an optimization algorithm that works on a “class” of optimization problems. The answer is by parameterizing the optimizer. This way, we effectively cast algorithm design as a learning problem, in which we want to learn the parameters of our optimizer (, which we call the optimizee parameters.)

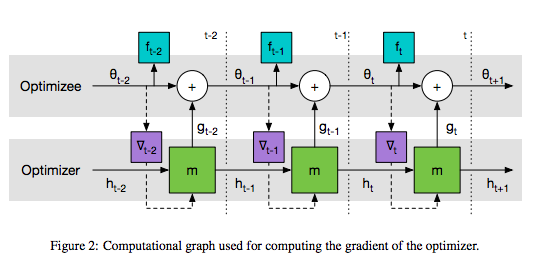

But how do we model the optimizer? We use Recurrent Neural Network. Therefore, the parameters of the optimizer are just the parameters of RNN. The parameters of the original function in question (i.e. the cost function of “one instance” of a problem that is drawn from a class of optimization problems) are referred as “optimizee parameters”, and are updated using the output of our optimizer, just as we update parameters using the gradient in SGD. The final optimizee parameters will be a function of the optimizer parameters and the function in question. In summary:

where is modeled by RNN. So is the parameter of RNN. Because LSTM is better than vanilla RNN in general (citation needed*), the paper uses LSTM. Regular gradient descent algorithms use .

RNN is a function of the current hidden state , the current gradient , and the current parameter .

The “goodness” of our optimizer can be measured by the expected loss over the distribution of a function , which is

(I’m ignoring in the above expression of because in the paper they set .)

For example, suppose we have a function like . If are drawn from the Gaussian distribution with some fixed value of and , the distribution of the function can be defined. (Here, the class of optimization problem is a function where are drawn from Gaussian.) In this example, the optimizee parameter is . The optimizer (i.e. RNN) will be trained by optimizing functions which are randomly drawn from the function distribution, and we want to find the best parameter . If we want to know how good our optimizer is, we can just take the expected value of to evaluate the goodness, and use gradient descent to optimize this .

After understanding the above basics, all that is left is some implementation/architecture details for computational efficiency and learning capability.

(By the way, there is a typo in page 3 under Equation 3; should be . Otherwise it doesn’t make sense.)

Coordinatewise LSTM optimizer

The Figure is from the paper : Figure 2 on page 4

To make the learning problem computationally tractable, we update the optimizee parameters coordinate-wise, much like other successful optimization methods such as Adam, RMSprop, and AdaGrad.

To this end, we create LSTM cells, where is the number of dimensions of the parameter of the objective function. We setup the architecture so that the parameters for LSTM cells are shared, but each has a different hidden state. This can be achieved by the code below:

lstm = tf.nn.rnn_cell.BasicLSTMCell(hidden_size)

for i in range(number_of_coordinates):

cell_list[i] = tf.nn.rnn_cell.MultiRNNCell([lstm_cell] * num_layers) # num_layers = 2 according to the paper.Information sharing between coordinates

The coordinate-wise architecture above treats each dimension independently, which ignore the effect of the correlations between coordinates. To address this issue, the paper introduces more sophisticated methods. The following two models allow different LSTM cells to communicate each other.

- Global averaging cells: a subset of cells are used to take the average and outputs that value for each cell.

- NTM-BFGS optimizer: More sophisticated version of 1., with the external memory that is shared between coordinates.

Implementation Notes

Quadratic function (3.1 in the paper)

Let’s say the objective funtion is , where the elements of and are drawn from the Gaussian distribution.

(as in ) has to be the same size as the parameter size. So, it will be something like:

g, state = lstm(input_t, hidden_state) # here, input_t is the gradient of a hidden state at time t w.r.t. the hiddenAnd the update equation will be:

param = param + gThe objective function is:

The loss can be computed by double-for loop. For each loop, a different function is randomly sampled from a distribution of . Then, will be computed by the above update equation. So, overall, what we need to implement is the two-layer coordinate-wise LSTM cell. The actual implementation is here.

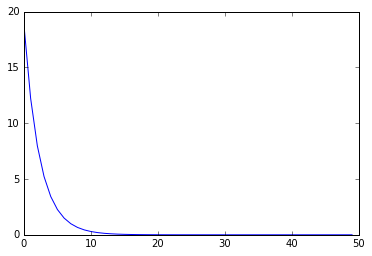

Results

I compared the result with SGD, but SGD tends to work better than our optimizer for now. Need more improvements on the optimization…